It would be great to have a more automated set reconstruction using LIDAR, 123D Catch, Photosynth, or another point-cloud-based method. But that is not always possible because of limited access to the location, time, money, and patience. Also, many times you really only need the basic layout of the area, such as the floor, the walls, and one major light source. For these cases we can manually rough out a 3D model of the scene in our panoramic image and texture that geometry with the HDR.

All credit for this scene and lesson goes to Christian Bloch and *The HDRI Handbook 2.0*.

Make sure your Maya working units (and therefore the grid) are set to meters so we are working at real-world scale.

Start with a NURBS sphere and increase its spans from 4 to 8 so we can flatten the top and bottom.

We will shape it into a big disk/cylinder and project our pano onto its inside.

When flattening the top and bottom, open the Move Tool options and turn off “Retain component spacing”.

In your viewport panel, go to Shading > Interactive Shading and turn it off, so the view doesn't drop to wireframe while you move the camera.

In the Attribute Spreadsheet, flip the normals in the Render tab so we can see the inside of the disk.

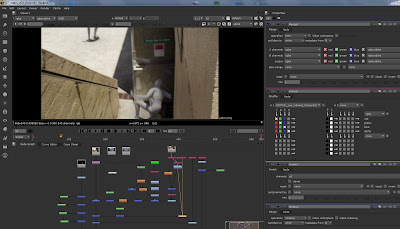

Apply a Surface Shader with a spherical projection of our panoramic image.

Check for backward text: you may have to flip the image horizontally by setting Uscale to -1 in the shader.
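To make the mapping concrete, here is a minimal Python sketch of what a spherical (equirectangular) projection does: it turns the direction from the projection center into a UV lookup into the pano. The axis convention (Y-up, u following `atan2(x, z)`, v = 0 at the nadir) and the function name are assumptions for illustration, not Maya's exact internals; the `flip_u` flag mimics the horizontal mirror you get from a U scale of -1.

```python
import math


def direction_to_equirect_uv(dx, dy, dz, flip_u=False):
    """Map a Y-up direction vector to equirectangular UV coordinates in [0, 1].

    Assumed convention (hypothetical, not necessarily Maya's seam placement):
    u follows the azimuth atan2(x, z); v is 0 at the nadir and 1 at the zenith.
    flip_u mirrors the image horizontally, like a U scale of -1.
    """
    length = math.sqrt(dx * dx + dy * dy + dz * dz)
    if length == 0.0:
        raise ValueError("direction must be non-zero")
    azimuth = math.atan2(dx, dz)                            # -pi..pi around the vertical axis
    elevation = math.asin(max(-1.0, min(1.0, dy / length)))  # -pi/2 (down)..pi/2 (up)
    u = 0.5 + azimuth / (2.0 * math.pi)
    v = 0.5 + elevation / math.pi
    if flip_u:
        u = 1.0 - u                                         # mirror around the vertical center line
    return u, v


# Looking along +X lands a quarter turn from the image center; flipping mirrors it.
print(direction_to_equirect_uv(1.0, 0.0, 0.0))               # (0.75, 0.5)
print(direction_to_equirect_uv(1.0, 0.0, 0.0, flip_u=True))  # (0.25, 0.5)
```

If text reads backward in the render, the image and the projection disagree on which way u runs, and mirroring u (as `flip_u` does here) brings them back into agreement.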

Ben Snow of ILM gave a great slide presentation (available as a PDF) at SIGGRAPH 2010 describing how they used their software Ethereal to extract lights from a pano, and how they used basic set reconstruction with the HDR textured onto it for lighting Iron Man 2.

The spherical projection center must be placed at the same height the panorama was shot from: 1.75 m.

Next, estimate the ceiling height (here 4.7 m) and make the set big, about 40 m in diameter.
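One way to sanity-check these estimates is to compute where the set's edges should appear in the pano and compare with the image. The sketch below assumes cylindrical walls of radius 20 m (half the 40 m diameter) centered under the projection point, and an equirectangular image whose row 0 is the zenith; the function names and the 4000-pixel image height are hypothetical.

```python
import math

CAMERA_HEIGHT = 1.75   # m, height the panorama was shot from
CEILING_HEIGHT = 4.7   # m, estimated ceiling height
WALL_RADIUS = 20.0     # m, half of the ~40 m set diameter (assumed cylindrical walls)


def edge_elevation_deg(edge_height, camera_height=CAMERA_HEIGHT, distance=WALL_RADIUS):
    """Elevation angle (degrees) of a horizontal edge seen from the projection center."""
    return math.degrees(math.atan2(edge_height - camera_height, distance))


def elevation_to_row(elevation_deg, image_height):
    """Pixel row in an equirectangular image (row 0 = zenith, bottom row = nadir)."""
    return (90.0 - elevation_deg) / 180.0 * image_height


floor_edge = edge_elevation_deg(0.0)               # about -5.0 degrees
ceiling_edge = edge_elevation_deg(CEILING_HEIGHT)  # about 8.4 degrees
for name, angle in (("floor/wall", floor_edge), ("ceiling/wall", ceiling_edge)):
    print(f"{name}: {angle:.2f} deg, row {elevation_to_row(angle, 4000):.0f} of 4000")
```

If the seams in the pano sit noticeably above or below these rows, the estimated height or diameter is off and the projected texture will slide across the geometry.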

1) Bake the spherical projection into a UV map so we can texture the geometry with the Surface Shader.

When you project and create the new UV maps for the geometry, use a spherical projection from exactly the same point as the original spherical projection, so you can switch back and forth.
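Why the exact same center matters can be seen by baking per-vertex UVs from two different centers. In this hypothetical Python sketch (an assumed Y-up equirectangular convention; the helper names are illustrative, not a Maya API), a floor vertex 20 m away is baked once from the 1.75 m shooting height and once from ground level.

```python
import math


def spherical_uv(vertex, center):
    """Equirectangular UV of a vertex as seen from a projection center (Y-up).

    Assumed convention: u follows atan2(x, z); v is 0 at the nadir, 1 at the zenith.
    """
    dx, dy, dz = (p - c for p, c in zip(vertex, center))
    r = math.sqrt(dx * dx + dy * dy + dz * dz)
    if r == 0.0:
        raise ValueError("vertex coincides with the projection center")
    u = 0.5 + math.atan2(dx, dz) / (2.0 * math.pi)
    v = 0.5 + math.asin(max(-1.0, min(1.0, dy / r))) / math.pi
    return u, v


def bake_uvs(vertices, center):
    """Bake one UV pair per vertex, freezing the projection into the mesh."""
    return [spherical_uv(p, center) for p in vertices]


shooting_center = (0.0, 1.75, 0.0)   # matches the panorama's camera height
wrong_center = (0.0, 0.0, 0.0)       # projection left at the origin by mistake
floor_vertex = (0.0, 0.0, 20.0)      # a point on the floor 20 m in front of the camera

print(bake_uvs([floor_vertex], shooting_center))  # v below 0.5: pano pixels below the horizon
print(bake_uvs([floor_vertex], wrong_center))     # v == 0.5: samples the horizon line instead
```

Baked from the shooting height, the floor vertex looks up the floor pixels of the pano; baked from the wrong center, it samples the horizon, so the texture would no longer line up with the projection.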

2) The columns and boxes can be planar projected and adjusted in the UV editor.

The back walls will need their UVs normalized to fit. You may also have to quad the triangles around the ugly nadir; this kind of work could be easier in Modo or another modeling program.
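For the planar-projected pieces (columns, boxes, back walls), the projection plus the normalize step roughly amounts to dropping one axis and rescaling into the 0-1 UV square. This is a hypothetical Python sketch of that idea, not Maya's Planar Mapping or Normalize implementation; `preserve_aspect` is an assumed option that keeps the texture from stretching.

```python
def planar_uvs(vertices, axis="z"):
    """Project 3D points onto the plane perpendicular to `axis` (drop that coordinate)."""
    keep = {"x": (2, 1), "y": (0, 2), "z": (0, 1)}[axis]
    return [(p[keep[0]], p[keep[1]]) for p in vertices]


def normalize_uvs(uvs, preserve_aspect=True):
    """Shift and scale UVs so they fit inside the 0-1 UV square."""
    us = [u for u, _ in uvs]
    vs = [v for _, v in uvs]
    u_min, v_min = min(us), min(vs)
    u_range = (max(us) - u_min) or 1.0   # avoid dividing by zero for degenerate shells
    v_range = (max(vs) - v_min) or 1.0
    if preserve_aspect:
        u_range = v_range = max(u_range, v_range)
    return [((u - u_min) / u_range, (v - v_min) / v_range) for u, v in uvs]


# A 10 m wide, 4.7 m tall back wall standing at z = 20 m, projected along Z.
wall = [(-5.0, 0.0, 20.0), (5.0, 0.0, 20.0), (5.0, 4.7, 20.0), (-5.0, 4.7, 20.0)]
print(normalize_uvs(planar_uvs(wall, "z"), preserve_aspect=False))
# [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
```

Stretching to fill the square uses every texel but distorts the painted texture; preserving the aspect ratio leaves empty UV space but keeps the paint undistorted.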

3) Separate the projected pano into foreground and background, using two shaders.

4) Use Photoshop or another paint program to fill in or repaint occluded areas.

5) Select a column you don’t want in the background, delete it, and fill the hole with Content-Aware Fill in Photoshop.

If you get this warning when using EXR files as textures, just convert them to HDR format with Nuke:

// Warning: Failed to open texture file d:/class_fxphd/project_fxphd01/sourceimages/class10_panorecontruct/subway-lights_aligned.exr //

The EXR will actually work in the render, but Maya gives this warning anyway.

Once the environment geometry is textured, you will be lighting primarily with Final Gather. To get better shadows, you could place area lights at the locations of the lights in the scene. Another option for direct lighting and shadows is a separate render layer that swaps your environment geometry for an IBL using the same map, with Environment Lighting Mode turned on. This is slow to render, but it would only be used for the shadows landing on the ground, caught with mip_matteshadow.
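To estimate where those area lights should go, one option is to cast a ray from the projection center through the light's pixel in the pano and intersect it with the ceiling plane. This is a minimal Python sketch under assumed conventions (Y-up, equirectangular image with row 0 at the zenith, u following `atan2(x, z)`, lights sitting on a flat 4.7 m ceiling); the function names and the example pixel are hypothetical.

```python
import math


def pixel_to_direction(px, py, width, height):
    """Unit direction (Y-up) for a pixel position in an equirectangular pano.

    Assumed convention: u = px / width follows atan2(x, z); row 0 is the zenith.
    """
    azimuth = (px / width - 0.5) * 2.0 * math.pi
    elevation = (0.5 - py / height) * math.pi
    return (math.cos(elevation) * math.sin(azimuth),
            math.sin(elevation),
            math.cos(elevation) * math.cos(azimuth))


def intersect_ceiling(center, direction, ceiling_height):
    """Point where a ray from `center` hits the horizontal ceiling plane, or None."""
    if direction[1] <= 0.0:
        return None                     # ray points at or below the horizon
    t = (ceiling_height - center[1]) / direction[1]
    return tuple(c + t * d for c, d in zip(center, direction))


center = (0.0, 1.75, 0.0)               # projection center at the shooting height
# A light found at pixel (4000, 1000) in a hypothetical 8000 x 4000 pano
light_dir = pixel_to_direction(4000, 1000, 8000, 4000)
print(intersect_ceiling(center, light_dir, 4.7))  # roughly (0.0, 4.7, 2.95)
```

Because the ray starts at the same center as the spherical projection, a light placed at the returned point lines up with the bright spot in the projected texture.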